AI Chatbots Have Thoroughly Infiltrated Scientific Publishing

One percent of scientific articles published in 2023 showed signs of generative AI’s potential involvement, according to a recent analysis

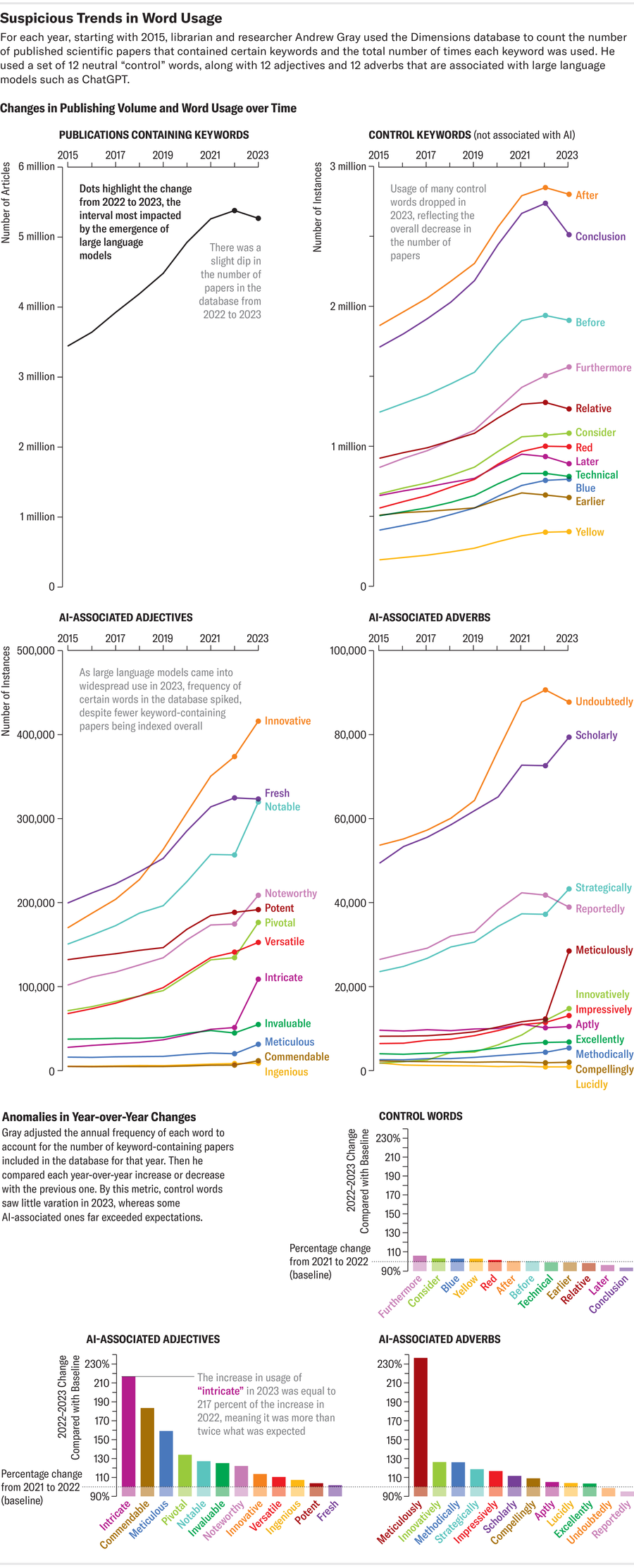

Amanda Montañez; Source: Andrew Gray

Researchers are misusing ChatGPT and other artificial intelligence chatbots to produce scientific literature. At least, that’s a new fear that some scientists have raised, citing a stark rise in suspicious AI shibboleths showing up in published papers.

Some of these tells are reasonably obvious evidence that a scientist used an AI chatbot known as a large language model (LLM), such as the inadvertent inclusion of “certainly, here is a possible introduction for your topic” in a recent paper in Surfaces and Interfaces, a journal published by Elsevier. But “that’s probably only the tip of the iceberg,” says scientific integrity consultant Elisabeth Bik. (A representative of Elsevier told Scientific American that the publisher regrets the situation and is investigating how it could have “slipped through” the manuscript evaluation process.) In most other cases AI involvement isn’t as clear-cut, and automated AI text detectors are unreliable tools for analyzing a paper.

Researchers from several fields have, however, identified a few key words and phrases (such as “complex and multifaceted”) that tend to appear more often in AI-generated sentences than in typical human writing. “When you’ve looked at this stuff long enough, you get a feel for the style,” says Andrew Gray, a librarian and researcher at University College London.

LLMs are designed to generate text, but what they produce may or may not be factually accurate. “The problem is that these tools are not good enough yet to trust,” Bik says. They succumb to what computer scientists call hallucination: simply put, they make stuff up. “Specifically, for scientific papers,” Bik notes, an AI “will generate citation references that don’t exist.” So if scientists place too much confidence in LLMs, study authors risk inserting AI-fabricated flaws into their work, adding more potential for error to the already messy reality of scientific publishing.

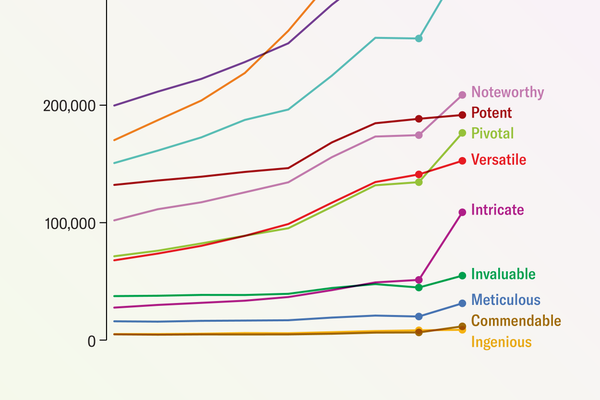

Gray recently hunted for AI buzzwords in scientific papers using Dimensions, a data analytics platform that its developers say tracks more than 140 million papers worldwide. He searched for words disproportionately used by chatbots, such as “intricate,” “meticulous” and “commendable.” These indicator words, he says, give a better sense of the problem’s scale than any “giveaway” AI phrase a clumsy author might copy into a paper. At least 60,000 papers (slightly more than 1 percent of all scientific articles published globally last year) may have used an LLM, according to Gray’s analysis, which was released on the preprint server arXiv.org and has yet to be peer-reviewed. Other studies that focused specifically on subsections of science suggest even more reliance on LLMs. One such investigation found that up to 17.5 percent of recent computer science papers exhibit signs of AI writing.

Amanda Montañez; Source: Andrew Gray
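
The basic idea behind indicator-word screening can be sketched in a few lines of Python. This is a simplified illustration, not Gray’s actual method: the word list is limited to the three words named above, and the `min_hits` threshold, the sample abstracts and the function names are hypothetical choices made for demonstration.

```python
import re

# The three indicator words named in the article. A real analysis would use a
# longer, empirically derived list and compare against pre-ChatGPT baselines.
INDICATOR_WORDS = {"intricate", "meticulous", "commendable"}

def indicator_hits(text: str) -> int:
    """Count whole-word occurrences of indicator words, ignoring case.
    Inflected forms such as 'meticulously' are deliberately not matched."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return sum(1 for token in tokens if token in INDICATOR_WORDS)

def share_flagged(abstracts: list[str], min_hits: int = 2) -> float:
    """Fraction of abstracts containing at least `min_hits` indicator words.
    A high share is a prompt for closer reading, not proof of LLM use."""
    if not abstracts:
        return 0.0
    flagged = sum(1 for abstract in abstracts if indicator_hits(abstract) >= min_hits)
    return flagged / len(abstracts)

# Hypothetical abstracts for illustration only.
abstracts = [
    "A meticulous study of the intricate folding pathway.",
    "We measured enzyme activity in 40 samples.",
    "This commendable dataset is released openly.",
]
print(share_flagged(abstracts))              # 1 of 3 abstracts has two hits
print(share_flagged(abstracts, min_hits=1))  # 2 of 3 abstracts have at least one
```

Requiring more than one hit trades recall for precision: a single word such as “intricate” appears in plenty of human-written papers, so counts like these are better suited to estimating scale across a large corpus than to judging any individual paper.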

Those findings are supported by Scientific American’s own search using Dimensions and several other scientific publication databases, including Google Scholar, Scopus, PubMed, OpenAlex and Internet Archive Scholar. This search looked for signs that can suggest an LLM was involved in producing text for academic papers, measured by the prevalence of phrases that ChatGPT and other AI models typically append, such as “as of my last knowledge update.” In 2020 that phrase appeared only once in results tracked by four of the major paper analytics platforms used in the investigation. But it appeared 136 times in 2022. There were some limitations to this approach, though: it could not filter out papers that might have represented studies of AI models themselves rather than AI-generated content. And these databases include material beyond peer-reviewed articles in scientific journals.

Like Gray’s approach, this search also turned up subtler traces that may point toward an LLM: it counted how often stock words or phrases favored by ChatGPT appeared in the scientific literature and tracked whether their prevalence after the November 2022 release of OpenAI’s chatbot differed notably from the years just before it (going back to 2020). The findings suggest something has changed in the lexicon of scientific writing, a shift that might be driven by the writing tics of increasingly common chatbots. “There’s some evidence of some words changing steadily over time” as language normally evolves, Gray says. “But there’s this question of how much of this is long-term natural change of language and how much is something different.”
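
The before-and-after comparison described above can be illustrated with a small Python sketch. It assumes each record is a (year, text) pair and divides hits by the number of records per year, because raw counts also rise simply as more papers are published. The function names, baseline years and toy records are hypothetical, and this is not the exact procedure behind Scientific American’s search.

```python
from collections import Counter

def yearly_rates(records: list[tuple[int, str]], phrase: str) -> dict[int, float]:
    """Share of each year's records whose text contains `phrase` (case-insensitive).
    This is a plain substring match, so 'delve' also matches 'delves' and 'delved'."""
    phrase = phrase.lower()
    hits = Counter()
    totals = Counter()
    for year, text in records:
        totals[year] += 1
        if phrase in text.lower():
            hits[year] += 1
    return {year: hits[year] / totals[year] for year in totals}

def ratio_to_baseline(rates: dict[int, float], baseline_years: list[int], year: int) -> float:
    """Rate in `year` divided by the mean rate over `baseline_years`.
    Values well above 1 indicate a shift but cannot, by themselves, separate
    chatbot influence from ordinary, gradual language change."""
    base = sum(rates[y] for y in baseline_years) / len(baseline_years)
    return float("inf") if base == 0 else rates[year] / base

# Hypothetical records for illustration only.
records = [
    (2020, "We delve into protein folding."),
    (2020, "Results for cohort A."),
    (2021, "Results for cohort B."),
    (2021, "We DELVE into cohort C."),
    (2023, "Let us delve into this."),
    (2023, "We delve further."),
    (2023, "Results for cohort D."),
    (2023, "This study delves into E."),
]
rates = yearly_rates(records, "delve")
print(ratio_to_baseline(rates, [2020, 2021], 2023))  # 1.5
```

A ratio like this flags that something changed, but, as Gray notes, it cannot tell long-term natural change in language apart from something different. It also inherits the search’s limitation of counting papers that study AI models themselves.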

Indicators of ChatGPT

For signs that AI may be involved in paper production or editing, Scientific American’s search delved into the word “delve,” which, as some informal monitors of AI-generated text have pointed out, has seen an unusual spike in use across academia. An analysis of its use across the 37 million or so citations and paper abstracts in life sciences and biomedicine contained in the PubMed catalog highlighted how much the word is in vogue. Up from 349 uses in 2020, “delve” appeared 2,847 times in 2023 and has already cropped up 2,630 times so far in 2024, a 654 percent increase over 2020. Similar but less pronounced increases were seen in the Scopus database, which covers a broader range of sciences, and in Dimensions data.

Other terms flagged by these monitors as AI-generated catchwords have seen similar rises, according to the Scientific American analysis: “commendable” appeared 240 times in papers tracked by Scopus and 10,977 times in papers tracked by Dimensions in 2020. Those numbers spiked to 829 (a 245 percent increase) and 20,536 (an 87 percent increase), respectively, in 2023. And in a perhaps ironic twist for would-be “meticulous” research, that word doubled on Scopus between 2020 and 2023.
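
The percentages quoted in this analysis follow from the standard percent-change formula, (new - old) / old × 100. A short Python check using only the figures reported above reproduces them; the function name is illustrative.

```python
def percent_increase(old: float, new: float) -> float:
    """Percent change from `old` to `new`: (new - old) / old * 100."""
    if old == 0:
        raise ValueError("percent increase is undefined when the starting count is 0")
    return (new - old) / old * 100

# Figures reported in the Scientific American analysis.
print(round(percent_increase(349, 2_630)))      # "delve" on PubMed, 2020 to 2024 so far: 654
print(round(percent_increase(240, 829)))        # "commendable" on Scopus, 2020 to 2023: 245
print(round(percent_increase(10_977, 20_536)))  # "commendable" on Dimensions, 2020 to 2023: 87
```

Note that the 2024 “delve” count covers only part of the year, so it understates the full-year total.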

More Than Mere Words

In a world where academics live by the mantra “publish or perish,” it’s unsurprising that some are using chatbots to save time or to bolster their command of English, which is often required for publication yet may be a writer’s second or third language. But employing AI technology as a grammar or syntax helper could be a slippery slope to misapplying it in other parts of the scientific process. Writing a paper with an LLM co-author, the worry goes, may lead to key figures generated whole cloth by AI or to peer reviews that are outsourced to automated evaluators.

These are not purely hypothetical scenarios. AI certainly has been used to produce scientific diagrams and illustrations that have often been included in academic papers (including, notably, one bizarrely endowed rodent) and even to replace human participants in experiments. And the use of AI chatbots may have permeated the peer-review process itself, based on a preprint study of the language in feedback given to scientists who presented research at conferences on AI in 2023 and 2024. The prospect of AI-generated judgments creeping into academic papers alongside AI text concerns experts, including Matt Hodgkinson, a council member of the Committee on Publication Ethics, a U.K.-based nonprofit organization that promotes ethical academic research practices. Chatbots are “not good at doing analysis,” he says, “and that is where the real danger lies.”